Is It Time to Fuse Supply Chain and IT?

AI, in principle, is supply chain’s next great digital leap forward. But the data-sensitivity of AI/ML models calls for much tighter and more continuous collaboration between business and IT functions than in previous waves of digital innovation. MLOps is a key part of the solve. But it may also portend a more fundamental organizational shift.

HP co-founders Bill Hewlett and David Packard created the first product manager (PM) role as far back as 1945. The vision was to create a single locus of product ownership, strengthen integration between marketing and development teams to improve product quality, and speed up time-to-market. They were ahead of their time.

Sixty years later, digital pioneers like Google and Netflix leveraged the same PM principles combined with Toyota-inspired agile methods to accelerate digital product development cycles by as much as 4x, cut costs by 25-50%, improve quality by 50%, and increase employee engagement by upwards of 30%.

PM roles are now common in supply chain, too. In the 12 months ending July 2023, 65% of companies in Zero100’s 174-brand data set hired at least one supply chain product manager. Siemens, Cardinal Health, Roche, and Amazon led the pack with over 100 supply chain PM job postings each.

AI Situation, Complication

AI, in principle, is supply chain’s next great digital leap forward. Indeed, a Zero100 crosstab of LinkedIn job postings vs. financial performance across 100 global brands found that supply chain leaders investing most heavily in recruitment of AI talent saw a 1.7x increase in revenue and a 1.5 percentage point gross margin lift compared to their industry peers.

But AI/ML models are also much more data-fragile than traditional software. In traditional software engineering, a well-designed application can and should stay stable while its data inputs evolve. With classic and generative AI, models and dynamic data must stay current with the business environment and flow in sync.

The negligent alternative is called model “drift,” a divergence between a model and the environment for which it is optimized. It’s why, 18 months after a global pandemic, much of the retail and fashion sector was still digging out of a 30%+ inventory overhang. When demand plateaued due to inflation in early 2022, otherwise beautifully designed ML models were still running on data inputs from the previous 3-6 months. A bit of inattention led to a lot of disaster.
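For readers who want to see what a basic drift check can look like, here is a minimal sketch using the Population Stability Index (PSI), one common way to compare the data a model was trained on with the data it sees today. It is an illustration only, not the method used by any company named in this article: the function name, the weekly demand figures, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions.

```python
import math


def population_stability_index(baseline, recent, bins=5):
    """PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(sample), 1e-6) for c in counts]

    b, r = shares(baseline), shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


if __name__ == "__main__":
    # Hypothetical weekly unit demand: the training window vs. the latest weeks.
    training_window = [100, 105, 98, 110, 102, 107, 95, 103]
    latest_weeks = [80, 78, 85, 82, 79, 81, 77, 84]

    psi = population_stability_index(training_window, latest_weeks)
    # 0.2 is an assumed alert threshold, not a universal standard.
    if psi > 0.2:
        print(f"PSI = {psi:.2f}: inputs have shifted, review or retrain the model")
    else:
        print(f"PSI = {psi:.2f}: inputs look stable")
```

A check like this only flags that the inputs have moved. Deciding whether to retrain, and on which data, is where the business and IT collaboration described here comes in.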

But the miss is understandable. A second-order challenge with modeling is data complexity. The average AI leader has hundreds of AI models running in parallel across interdependent functions and teams at any one moment. Driving value requires exponentially more data wrangling and model training than in previous waves of digital innovation. OpenAI reports that 92% of Fortune 500 companies are at least experimenting with ChatGPT. One CSCO interviewed for our recent AI report indicated that as much as 90% of his company’s AI/ML models sit on the shelf, unused due to a lack of data upkeep. Others, like Samsung, have blocked internal ChatGPT use among employees, having been burned by recent generative AI security breaches. It’s still the AI Wild West.

MLOps to the Rescue?

One promising solve for the complexity problem may be MLOps (and its more recent generative AI cousin, LLMOps).

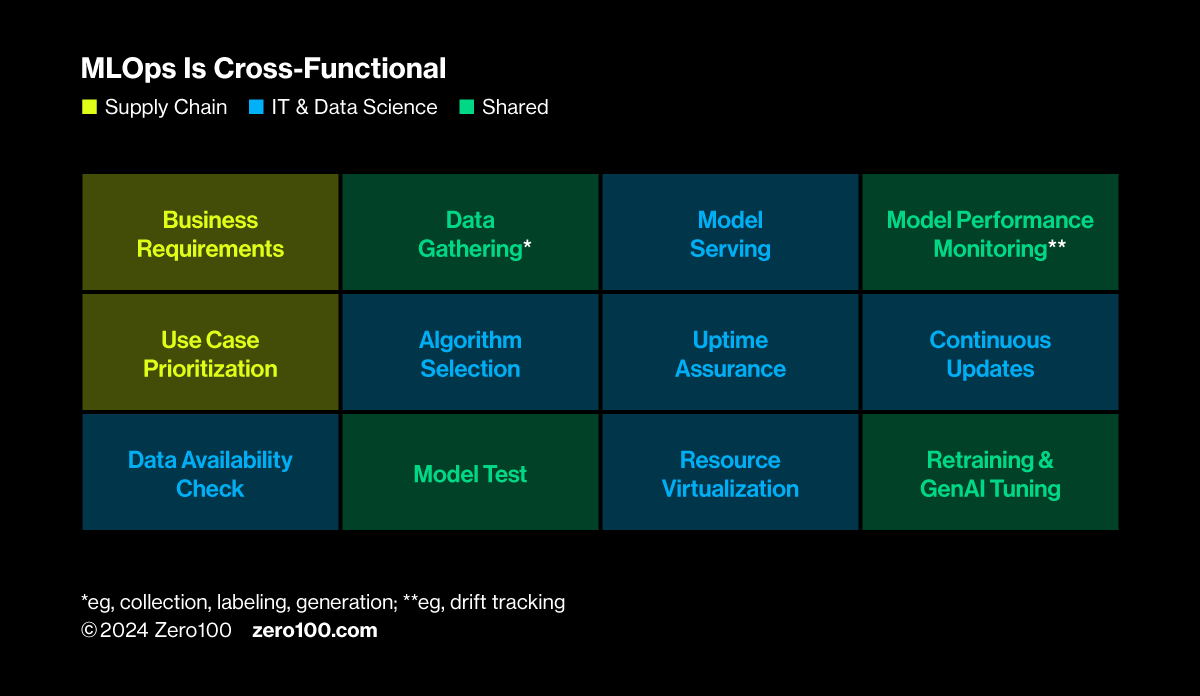

In 2017, acknowledging the dynamism of ML models, AI leaders like Amazon and Schneider Electric pioneered a digital software PM and “DevOps” variant that leverages PM principles but adds a more continuous and intensive collaboration between data science teams, engineers, and their business counterparts.

The idea is simple but elegant – as the pace of data ingestion and model tuning picks up, include more stakeholders to ensure all are on the same page, fast. And, given supply chain’s front-row seat on shifts in the business environment, place supply chain stakeholders directly at the MLOps table.

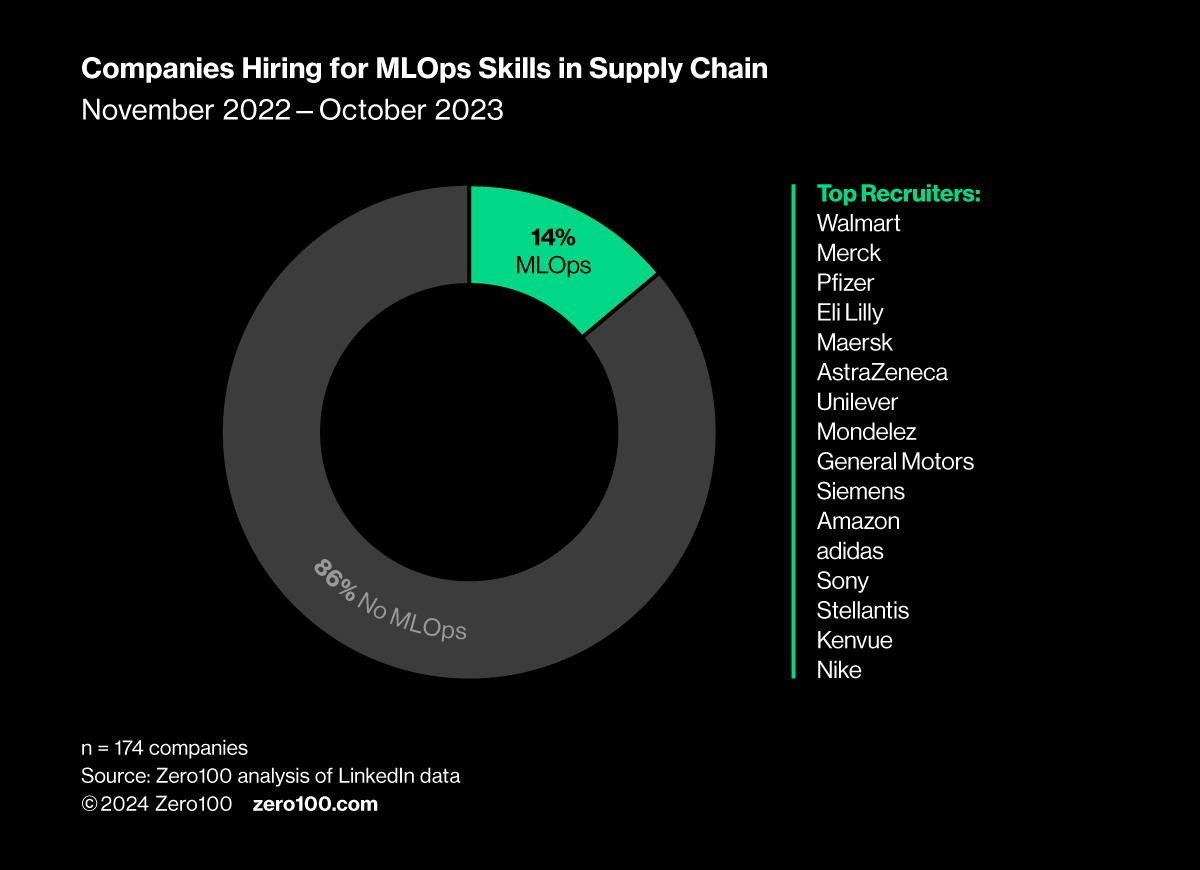

Although it is early days, AI leaders like Walmart, Merck, and Maersk are putting their money where their mouth is, hiring MLOps skills directly into supply chain positions. Our review of LinkedIn job posts among 174 major global brands indicates that 14% have already taken the MLOps plunge.

Encouragingly, new MLOps and LLMOps tools are also radically streamlining collaboration. So-called “AIOps” describes a new portfolio of process assistants that automate work-intensive tasks at each stage of the ML model lifecycle. Some report modeling efficiency gains of as much as 50%. Increasingly, MLOps is both light-touch and hugely impactful.

Seismic Implications for the Future of Work

There is no longer any doubt that the ROI for AI talent is exceptional. The problem is that the pioneers are far too few. One 2023 Zero100 survey found just 11% of supply chain leaders have scaled AI beyond the pilot phase.

Meanwhile, first movers race ahead, capitalizing on AI’s built-in network and learning effects, broadening the digital divide. The damage may be unrecoverable for those who wait.

The knowing-doing gap is understandable. Matrixed organizations combined with legacy hands-off attitudes towards the IT function make the scaling problem seem insoluble. Per one member of our leadership community, “we have more pilots than Lufthansa.”

New collaboration mechanisms like MLOps may be our new path through. But the emergence of interdependent MLOps and data platforming processes also suggests we ought to be having a more fundamental conversation about the future relationship between supply chain and IT.

Are we slow-walking into an inevitable merger? Are we missing an opportunity to integrate further still? Are we fighting against ourselves by insisting on functional specialization that has passed its due date? We think the answer may be yes. We just need to step back to see it.

Let’s.