People-First Transformation and the AI Flywheel

Whether generative or discriminative, AI tools are meaningless without a question to answer. To successfully transition to digital supply chains, people need to actively lead the transformation – and technology will learn to follow their lead.

I broke my Pixel phone this week and nearly had a heart attack realizing how crippled I suddenly was without my tech. My first call for help went to a chatbot. Dead end.

The second call went to an amazing human – Didi, from AT&T customer service. Her patient yet quick maneuvering through Google Cloud and assorted devices got me out of a self-inflicted disaster. The experience reinforced a principle I often hear from supply chain leaders who truly understand how to make digital transformation happen: think “People First.”

Digitization, Decarbonization, and the Path to Zero100

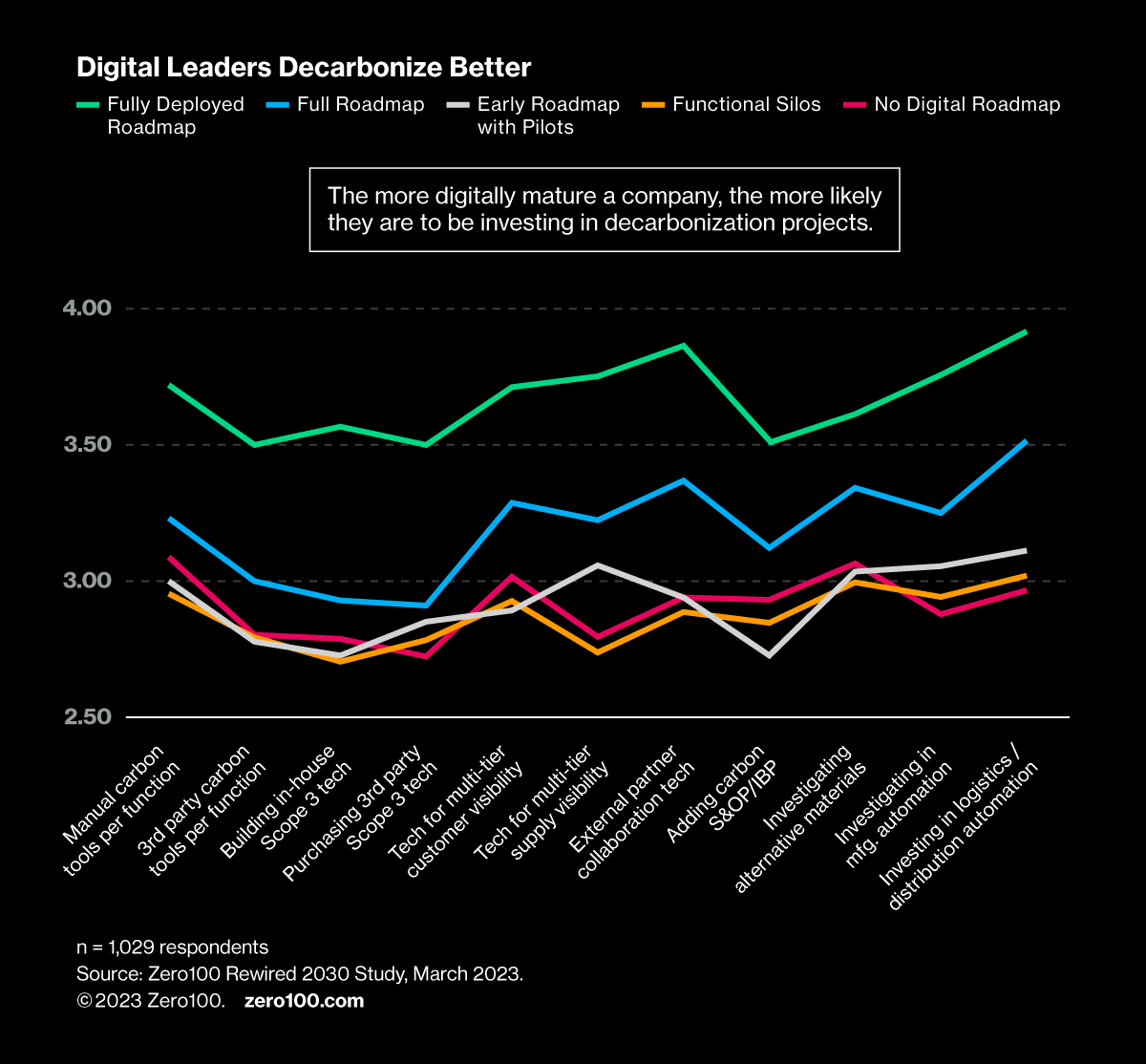

Zero100 research points to a powerful positive feedback loop between talent and tools. Organizations that lead in attracting supply chain practitioners with skills in software engineering, analytics, and AI are not only the ones that are best at using these tools to improve business performance but also the ones farthest ahead on using tech to drive decarbonization. The common ingredient is starting with select people who bring digital skills to the job and then letting their creativity loose to cut a path out of Excel-hell.

One such example is Gerardo Mora, a young business engineer at Unilever in Mexico. Gerardo’s story highlights the next-generation digital skills he brought to the job directly from university, namely making better use of data for forecasting. Just as important, it demonstrates the brilliant response of his boss, which was to encourage and leverage his work. The result was one more brick in the foundation of a dramatically better system of collaborative AI-based forecasting connecting Unilever and its biggest customer in Mexico – Walmart.

The tech partnership is called Sky, and its performance in improving fill rates and logistics efficiency is best-in-class. Equally important is the proof that Unilever takes digitization seriously and that top young talent can expect to learn fast with huge datasets in a live environment. It is a statement of respect for both talent and tools.

It is also telling that Unilever and Walmart are visible leaders in the push for sustainable supply chains. This combination of purpose and professional growth is catnip to young people eager to learn and make a difference.

AI Learns from People as People Learn to Use AI

One of the biggest problems with ChatGPT and other widely used generative AI tools that operate on massive public datasets is known as “hallucinations,” which are essentially bad groupthink. The AI has no way of knowing when its answer clashes with common sense or higher intent. Expert humans train AI, differentiating right from wrong as it learns. In mirror image, this is what humans do as they learn to create better AI prompts and curate better datasets for LLMs to chew on.

AI tools, whether generative or discriminative (i.e., older-school predictive tools), are meaningless without a question to answer. People are asking questions, and as the machine gets better at giving answers, more decisions can be automated. In Unilever’s Sky program, billions of configurations are considered and hundreds of thousands of choices are made automatically, leaving the people who asked the original questions free to make calls on the few decisions that aren’t routine. “Decision intelligence” describes what’s happening here: people learn to lead the transformation as technology learns to follow people’s lead.

What about Skynet and the Terminator?

Former Googler and legendary AI pioneer Geoffrey Hinton was interviewed recently on 60 Minutes and raised the alarm about whether humans should “protect themselves” from AI’s potential unintended consequences. His concern is certainly justified, given the rise in processing speeds and exponential growth in training datasets.

As a layman, though, I wonder whether the best protection is also the most obvious, which is that we should all learn what AI can do. Maybe the right analogy is fire. When humans first learned to use fire, we must have worried about letting it get out of control. “Don’t play with fire” is a parental admonition that encapsulates thousands of generations of learning, but it doesn’t mean we ignore fire.

People First is about letting people like Didi from AT&T and Gerardo from Unilever lead the learning process for both humans and machines as we spin the flywheel faster.